This research paper is written by Monir Mooghen and Maia Kahlke Lorentzen from Cybernauterne. It was commissioned by Softer Digital Futures in 2023.

Abstract

As Elon Musk took over Twitter in 2022, with the distinct goal of reducing the content moderation of the microblogging platform in the name of free speech, many users started to look for alternative social media and networks to escape the rise in hateful and toxic content. One of the places many flocked to was Mastodon, which is part of the fediverse: a collection of servers, software types and internet protocols that can communicate with each other, forming an Open Source social network.

Unlike Twitter, Instagram and other types of corporate social media, the fediverse is decentralised and cannot be bought by billionaires. It has no ads or algorithms, and is run largely by volunteer administrators and moderators.

In this paper, we have surveyed a group of 347 users and 28 moderators of the fediverse about their experiences with non-commercial, open source decentralised social networks.

We found that many users joined recently, looking for an alternative to Twitter. While they enjoyed the absence of ads and algorithms, they found significant challenges with racism and a gatekeeping user culture that often made them feel unwelcome.

We found that moderators and admins largely worked on a volunteer basis, and that they did not encounter a lot of hate speech and harmful content on their instances, as they have community guidelines about hateful content and approval processes for new users, though the influx from Twitter had increased the moderation workload.

While moderators mostly agreed on being hardliners against outright fascist instances on the fediverse, many indicated that they found it difficult to make decisions on blocking or moderating big instances or users they know personally, for behaviour that is not outright against community guidelines, but still considered toxic or problematic.

About the research project

This research project is done by Monir Mooghen and Maia Kahlke Lorentzen from Cybernauterne, funded and commissioned by Softer Digital Futures. The research aims to collect and analyse data from a survey on experiences with user-driven social networks and user-driven admin and moderation work within the fediverse.

Cybernauterne is a Danish network of experts in cyber security and digital media that does research and offers workshops, consulting work and talks. Monir Mooghen has a background in techno-anthropology. Maia Kahlke Lorentzen has a background in media science. We both have extensive personal experience with digital community care and volunteer moderation work and moderate a Mastodon instance.

What is the fediverse?

The fediverse is a collection of servers, software types and internet protocols that can communicate with each other, forming an Open Source social network. Some of the most widely known fediverse networks are Mastodon and Misskey, microblogging software akin to Twitter, and Pixelfed, which is a photo-sharing service akin to Instagram.

Unlike their commercial counterparts, where user data is stored on walled-off proprietary servers, the fediverse exists on thousands of decentralised servers across the globe, run by various administrators.

These servers communicate with each other via a protocol called ActivityPub. They federate with each other, meaning they share user content back and forth.

Although the networks have different names and user experiences, the software they run is interoperable, so users across the fediverse can communicate with each other while using different software.

This means that users of different types of networks like Mastodon, Misskey and Pixelfed, can communicate freely with each other and other users. Imagine being able to see a Facebook post on your Twitter profile, or comment on a TikTok from your Instagram. This is possible on the fediverse.
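To make this interoperability more concrete: ActivityPub messages are JSON documents that use the ActivityStreams vocabulary. The sketch below builds a minimal `Create` activity wrapping a `Note`, which is roughly the shape of a post that one server delivers to another. The server name, the username and the `build_create_note` helper are hypothetical, and real servers add more fields (IDs, timestamps, signatures) than shown here.

```python
import json

# Standard ActivityStreams identifiers used by ActivityPub software.
AS_CONTEXT = "https://www.w3.org/ns/activitystreams"
AS_PUBLIC = "https://www.w3.org/ns/activitystreams#Public"


def build_create_note(actor_url: str, content: str) -> dict:
    """Return a minimal 'Create' activity wrapping a public 'Note'."""
    return {
        "@context": AS_CONTEXT,
        "type": "Create",
        "actor": actor_url,
        "to": [AS_PUBLIC],
        "object": {
            "type": "Note",
            "attributedTo": actor_url,
            "content": content,
            "to": [AS_PUBLIC],
        },
    }


# Hypothetical account on a hypothetical server.
activity = build_create_note(
    "https://mastodon.example/users/alice",
    "Hello, fediverse!",
)
print(json.dumps(activity, indent=2))
```

Because every compatible server reads the same vocabulary, a photo-sharing server and a microblogging server can both interpret this message, even though they present it differently to their users.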

Open Source moderation

The fediverse is by and large an undertaking by the Open Source Software movement, and many of the networks on there are built and run by volunteers, who are usually power-users of the networks themselves.

This also means that the task of moderation is done outside of the structures we know from commercial social media companies like Meta and X. There, a set of community guidelines detailing what type of behaviour and content is and isn’t allowed is set by the company and enforced by a mix of internal staff and third parties undertaking the task of moderation. There have been significant critiques of the working conditions of these moderators, of the decisions and the lack of transparency around moderation at companies like Meta and X, and of overly lax standards for moderating dangerous content.

A different approach to moderation is taken by companies like Reddit, which, aside from setting overarching guidelines, largely let the users decide on the themes and rules of their subreddits, and leave it to the individual administrator teams to enforce them. Facebook's group functionality largely follows the same concept.

The type of moderation practice in operation on the fediverse is quite free in comparison. There is no overarching set of guidelines for how to behave in the fediverse. Each individual fediverse server, whether it runs Mastodon or Pixelfed, sets its own set of guidelines and decides what users it will allow, how many, and if it wants to kick them out.

There are servers dedicated to “free speech” with absolutely no rules, usually inhabited by neonazis, and there are LGBT+ exclusive servers with a long list of behaviour and content that is allowed and not allowed. Moderation is done by the person who runs the server or a team of moderators assigned by them, not by a company. This also often means that the task of deciding what to allow, who to block and who to kick off rests with an active user of the network.

If one server decides they do not like the behaviour of the users of another server, they have the option of defederating: cutting off all ability of the users on their server to communicate with those on the offending one.
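The effect of defederation can be sketched as a toy model. This is not how Mastodon or any other fediverse software implements it: the `Instance` class, the `can_communicate` function and the `.example` domains are all hypothetical, and the model simply treats a block by either server as cutting off communication in both directions, as described above.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """Hypothetical stand-in for a fediverse server and its blocklist."""
    domain: str
    blocked_domains: set = field(default_factory=set)

    def defederate(self, other_domain: str) -> None:
        """Add another server's domain to this server's blocklist."""
        self.blocked_domains.add(other_domain)


def can_communicate(a: Instance, b: Instance) -> bool:
    """Users on a and b can interact only if neither server blocks the other."""
    return a.domain not in b.blocked_domains and b.domain not in a.blocked_domains


cozy = Instance("cozy.example")
art = Instance("art.example")
troll = Instance("troll.example")

# The admins of cozy.example decide to defederate from troll.example.
cozy.defederate(troll.domain)

print(can_communicate(cozy, art))    # True
print(can_communicate(cozy, troll))  # False
print(can_communicate(art, troll))   # True
```

Note that the decision is local: art.example still federates with troll.example until its own admins decide otherwise, which is why defederation decisions differ from server to server.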

So how does this decentralised moderation experience work in practice? Are the users navigating to servers with certain types of rules, and how do they experience this open-source moderation? How do the (largely volunteer) moderators experience their task, and what are the challenges that come with it?

Methodology

In this project, we created two survey questionnaires on the free and open-source web app LimeSurvey, which we distributed through our respective profiles on Mastodon and through Cybernauterne’s website. The two surveys were directed at:

1) Users in the fediverse

2) Instance administrators and moderators in the fediverse.

The survey was open for responses from May 2023 to September 2023 and got 347 completed responses from the user survey and 28 completed responses from the admin and mod survey. All data has been collected anonymously as the survey does not collect personally identifiable data from respondents. All quotes used in this publication have been explicitly allowed by each respondent.

You can find the survey questions for users here and survey questions for moderators here.

Complications

The fediverse is favoured by many technically skilled privacy-aware open source enthusiasts. Therefore, early on in the research phase, it became clear that we needed to use a survey platform which allowed for more advanced options for data protection than the more conventional options like SurveyMonkey and Google Forms.

We chose the open-source service LimeSurvey, which allows for extended privacy. However, it was also quite costly, the UX and backend were clunky and took ages to set up, and the formatting of the data collected made analysing the results difficult. This meant that our work process was heavily delayed.

Many of our respondents also criticised us for the way we framed questions if they showed less than stellar understanding of the technical and political reality of the fediverse.

Creating this analysis, collecting data and engaging with users about it was not dissimilar to the overall experience of entering the fediverse as a new user. The existing userbase expects newcomers to understand the existing culture, adhere to specific social norms, have tech skills, and understand and align with eg. open source values. Together with the added friction of clunky interfaces, technical terms and no recommendation algorithm, this makes getting into the fediverse a bumpy ride for anyone not overly dedicated to the experience.

User experience

Why do people join the fediverse?

In the user survey, we were interested in how the respondents became interested in creating an account within the fediverse, how they chose their instances and how they felt about moderation and instance rules. Because the fediverse is user-moderated and the rules apply only locally, not globally throughout the whole fediverse, the rules and users are more intertwined than on conventional social media sites like Facebook and Instagram, where users have little to no influence over community guidelines and user behaviour, and where the system of moderation is vast, fast-paced and black-boxed.

When asked “Why did you join Mastodon - or other networks on wider fediverse?”, the users could answer qualitatively, and the responses have been categorised hereafter. Some of the answers are in several categories at once. In the order of their size, the biggest categories are:

- Elon Musk's influence and dissatisfaction with X, former Twitter/Twitter migration

- Desire for control, privacy, user ownership, open software and decentralisation

- Curiosity and interest in alternative platforms/tech options

- Dissatisfaction with existing social media platforms and their algorithms

- Alternative to mainstream platforms and commercialization and corporate media

- Friends migrated, knew someone there, or referrals to the platform

- Connecting with like-minded individuals and communities

- Satisfaction with the alternative platform in the fediverse

- Political reasons

- Wanting a safer space

Around ⅓ of respondents (32.6%) mention Elon Musk’s influence on X, formerly known as Twitter, as their reason for having joined Mastodon or other networks in the fediverse. In October 2022 Elon Musk acquired Twitter (since renamed X), which spurred controversy and uncertainty among its users and resulted in a large number of them looking for alternatives to the platform, many migrating to Mastodon. Many users in our data set complain that Twitter was not good for their mental health, and that it felt unsafe and toxic.

X has since been overrun with users and harmful content from the extreme right-wing in Musk’s neverending attempt to allow for “free speech”. A long list of controversial figures, such as Donald Trump, Jordan Peterson, Andrew Tate and Kanye West have also returned after previously having been banned. The continuous progression of X becoming a right-wing space has created a mass migration to Mastodon since Mastodon’s user interface and features are somewhat like X.

Privacy and data questions

“I don't want to be in social media moderated by algorithms anymore.

I am looking for social network.”

12.1% of the respondents mentioned a desire for control, privacy, user ownership, open software and decentralisation as reasons for using the fediverse. Similarly, 7.7% mention wanting an alternative to mainstream platforms and getting away from the commercialization of social media and corporate social media. These two categories often overlap in the responses to the question. One respondent mentions:

"I wanted to experiment with social networks that respect users instead of turning them into products."

Such answers are frequently found in our data set showing that a high percentage of fediverse users reflect on data and privacy, wanting more control over the data they share, and similarly want transparency as to what they are giving up. The importance of knowing what data they give up, but also knowing that the data is not then owned by a big company is something a lot of users underline. Perhaps more than the general public, users within the fediverse try actively to combat online data harvesting and therefore seek alternative social media platforms to protect their data.

Choosing an instance

A popular part of the fediverse is Mastodon, where users have to choose a specific Mastodon server, also known as an instance, to sign up. The instances are connected and can interact with each other, but each can also set rules that affect all users on the instance. As described, an instance can choose to block other instances so users can’t see or interact with content from those instances. Limits can also be modified so that ‘toots’ (posts) can contain more than the standard 500 characters. An instance also gives the users proximity to each other, so choosing an instance is an important aspect of the user experience.

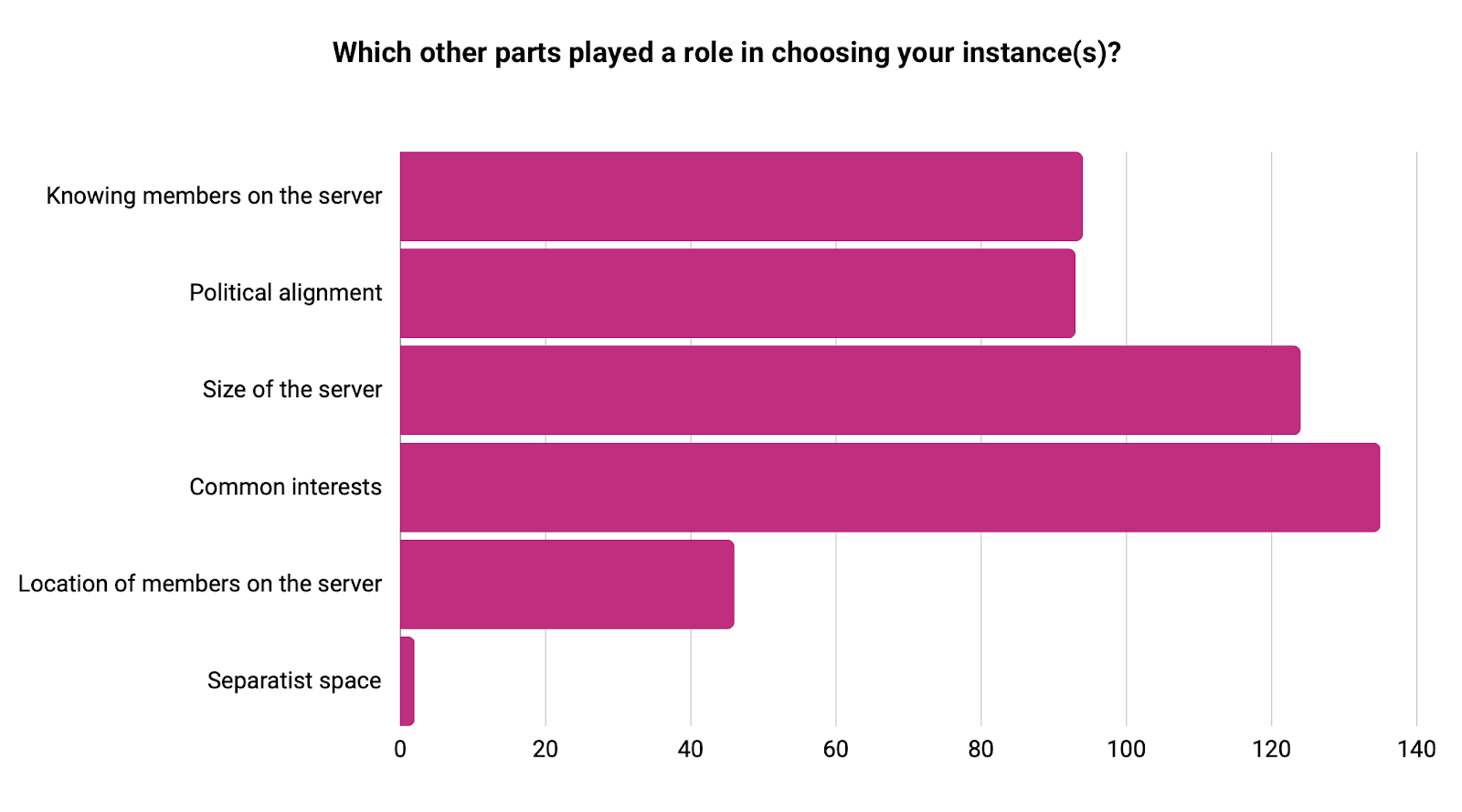

When asked, 215 respondents (62%) found the rules very or somewhat important when choosing the instance on which to create an account. Other important aspects in choosing an instance were the size of the instance, having common interests with other instance users, knowing other users in the instance, and being politically aligned with other instance users. Of the responses in the category “Other”, many respondents mention owning their own server. In our survey, we also see that many users own single-user instances, meaning there are no other users on their instance. This gives the instance owner the power and freedom to make instance-wide changes and to be in total control of their data, privacy and more.

Responses from the questionnaire for the users on their experience with moderation and choosing a server. 347 fediverse users participated in the survey
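As a quick check of how the reported share relates to the raw counts, the 62% figure can be reproduced from the 215 respondents and the 347 completed user responses. The `share` helper below is purely illustrative and not part of the survey tooling.

```python
def share(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal."""
    return round(100 * count / total, 1)


TOTAL_USERS = 347       # completed responses to the user survey
RULES_IMPORTANT = 215   # found rules very or somewhat important

print(share(RULES_IMPORTANT, TOTAL_USERS))  # 62.0
```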

Reporting content and mod-satisfaction

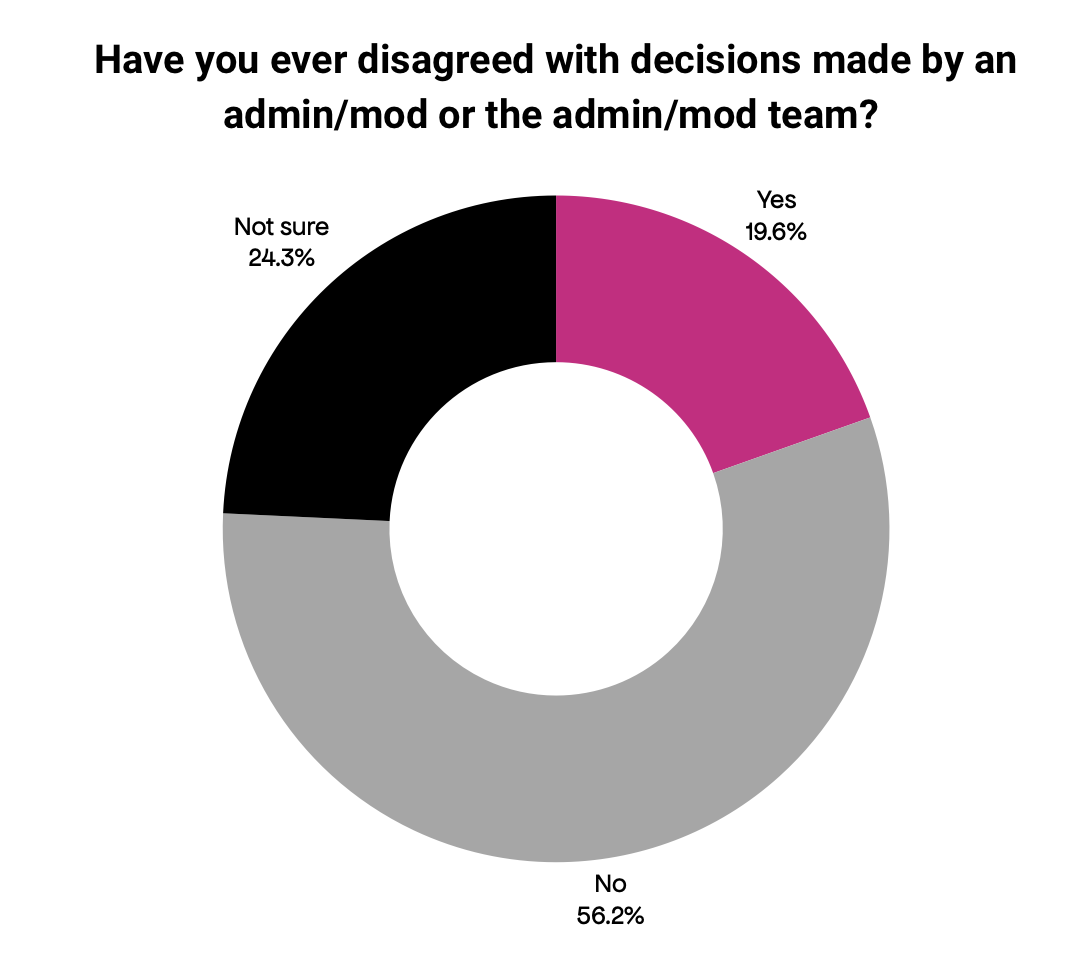

Almost 60% of user respondents have tried to report content to their instance mod/admin team before. A staggering 88.2% are either very satisfied or satisfied with the work and decisions made by the mod/admins. Almost 20% of the users have disagreed with decisions made by an admin or mod, and from the qualitative answers here, we see many different scenarios unfold afterwards.

Responses from the questionnaire for the users on their experience with moderation. 347 fediverse users participated in the survey

A large number of users report that they end up moving instances because of the disagreements. Sometimes this can be a trial-and-error process until they find an instance that works for them, or they simply create their own instance.

“I did address it, but my appeal was overruled by the council of admins due to fundamental philosophical differences. That's fair game, I changed servers and got a bit more picky before selecting new ones as well.”

“I just moved to a new instance with more permissive rules.”

Other users answer that they end up having discussions with the mod team about it.

“It was a minor disagreement. Maybe even just a difference in chosen solutions. Once I shared my idea, our admin weighed the options and the critiques then made an informed decision.”

“I've talked with the admins of two of the servers I've used regarding federation decisions. Sometimes, they've adjusted the decision based on my input. Even in cases where they haven't, the interactions were conducted in good faith, and I respect the decisions they made.”

And then there are disagreements which heavily affect the user experience and uphold some of the same dynamics that are prevalent on commercial social media:

“I have disagreed with other instance’s admins after making inter-instance reports but never had any issues with my current instances moderation. I left my previous instance in part due to the incredibly slow speed at which the instance was addressing reports and a general feeling they weren’t taking the concerns of their POC members seriously enough”

In this case, moderation is both somewhat opaque and unreachable. Concerns such as these should always be taken seriously by the individual mods/admins or reflected upon in the larger team, especially if the team lacks diversity. How can we protect marginalised users on our platforms? And how do we create moderation practices that ensure safety and harm reduction on our sites?

Racism and discrimination in the fediverse

Although several users state explicitly that anti-racist moderation rules have been an important aspect in choosing their instances, 23.3% of users in our survey have either witnessed or experienced racism in their time on the fediverse. One user explains:

“The Fediverse is still too "white". I know so many BIPOCs leaving Mastodon because they experience hate and racism. Some mods want to urge them to talk about racism only behind CW. Often Mods don't react to reports (depending on the instances). Moderation has to become professional with higher numbers of users.”

As we see with commercial social media, racialised users aren’t properly protected by either human or non-human moderation tools, and are more often than not left alone to deal with their experiences of hatred and racism. Unfortunately, the answers from our user survey point to similar experiences within the fediverse, where user cultures and moderators can be overwhelmingly white. Many answers in our data set discuss tendencies where moderators and admins overlook racism and fail to act to protect their racialised instance members. Some users explain:

“The fediverse has a "cute facade". But behind, many minorities experience the same hatred as anywhere.”

“As somebody who has both worked at and professionally studied social media companies, I have deep questions about what the ceiling and floor are for Mastodon's volunteer approach. But so far it both seems whiter to me than Twitter and the abuse here is significantly worse given my lesser reach.”

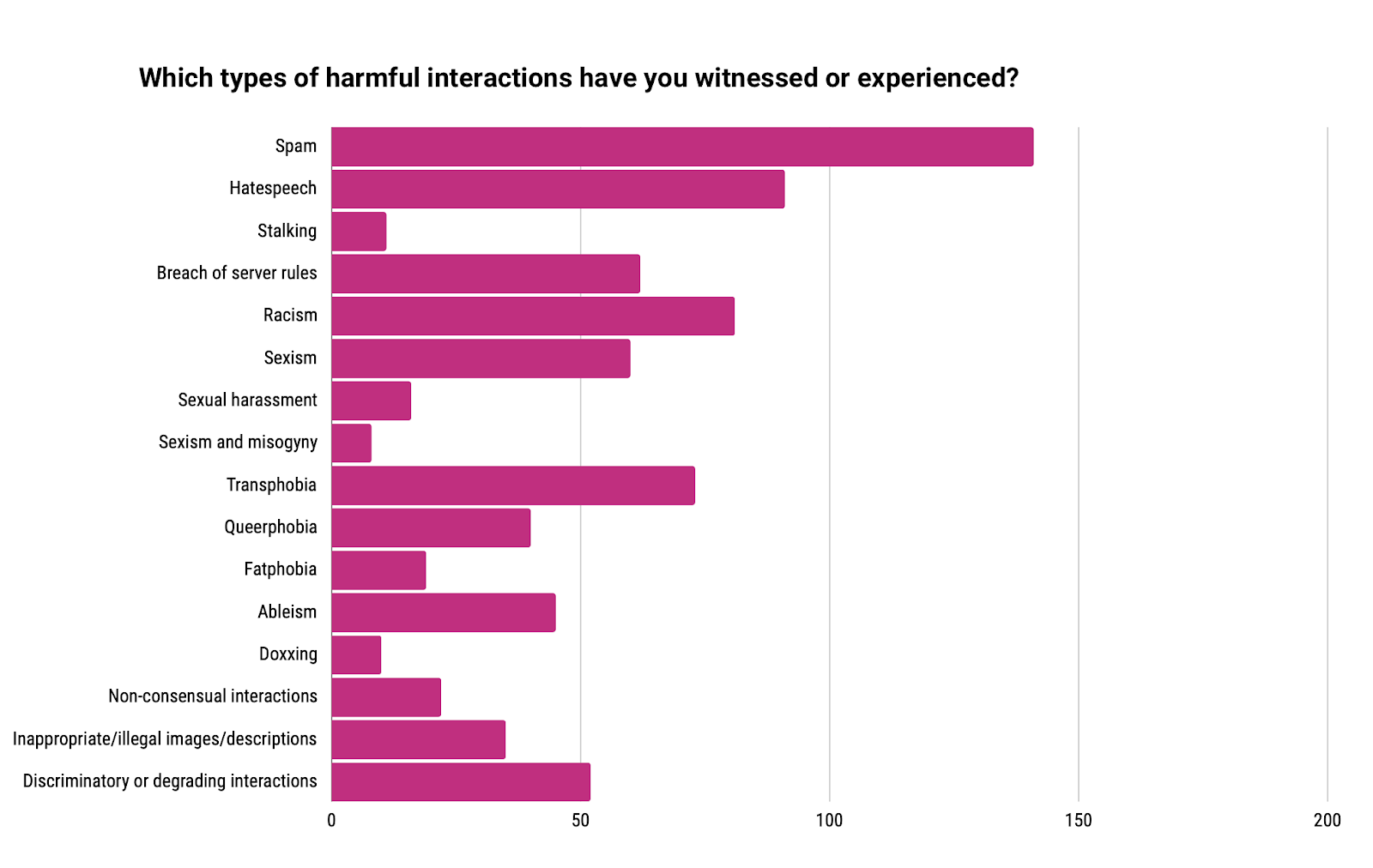

Similar answers are found throughout our data set where almost 60% of respondents answer that they have experienced harmful interactions or content in the fediverse. Aside from racism, spam (40.6%), hate speech (26.1%), transphobia (21%) and sexism (17.2%) are the most commonly experienced harmful interactions or content.

Responses from the questionnaire for the users on what type of harmful interactions they had witnessed. 347 fediverse users participated in the survey

Although many users go to the fediverse specifically to flee from the negative experiences and cultures on commercial social media, these answers show that the fediverse is far from a harm-free social space. Many users still find it better than traditional social media sites, but from our survey answers, we see that the experience is heavily influenced by the race and gender of the user.

Moderator and admin experience

In the survey of moderators and admins, who will be referred to as mods in the following, we focused both on why the mods chose to join the fediverse, what types of community guidelines they’d set up for their instance and how they navigated moderating.

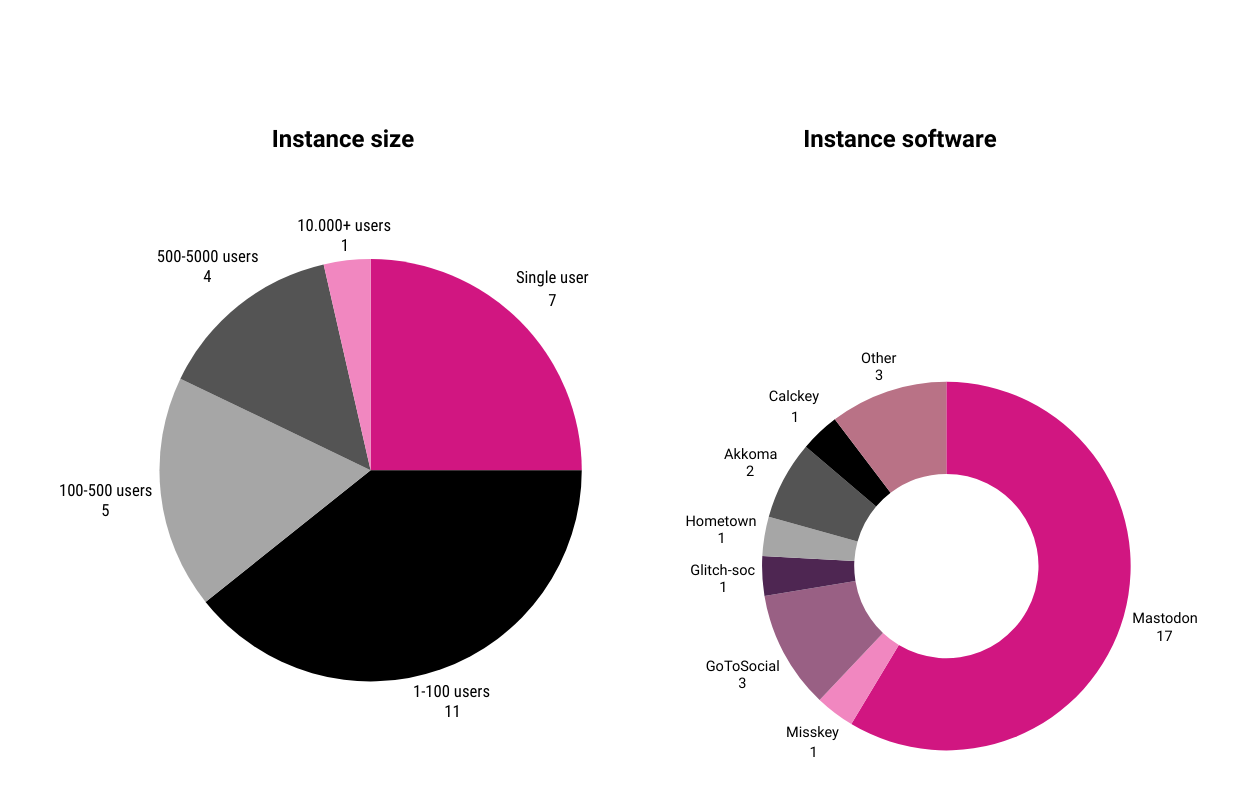

We got replies from 28 mods. 17 of them ran servers with Mastodon software, and the rest ran other types of fediverse software, like GoToSocial, Akkoma, Glitch and Hometown. See the distribution in the figure below.

Responses from the questionnaire for the moderators and admins on the size and software of the instances they moderated. 28 admins and mods participated in the survey

The size of the instances run by the mods surveyed varies, from single-user instances to one with 10,000+ users, with 18 in the single-user or 1 to 100 users categories.

Most mods responding to this survey were based either in Europe (14) or the US (9). We asked the mods about their identity as a non-mandatory question. Thirteen chose not to respond or indicated they thought the question was irrelevant. Others responded with multiple identity indicators. Eleven replied they were white, and one moderator team replied they were made up of black trans femmes. Six stated their gender as cis men, three as trans and two as queer or genderqueer. Three replied they were disabled. Four wrote they were middle-class, and two wrote they were lower class.

Like the users, many of the mods turned to the fediverse out of frustration with how X, formerly Twitter, was run by Elon Musk, especially the scaling back of moderation of hate speech and far-right content. Other reasons to join were the interest in an ad-free, non-commercial social media space, or an interest in tech and open-source software.

The mods run instances with a high degree of variation in the type of community they’re building and who they’re catering to. There are four topline categories of instances (aside from single-user servers):

- Instances aimed at friends and acquaintances, invite-only

- Instances for communities with specific hobbies and interests, eg. tabletop roleplay or furries

- Political instances: Specifically servers aimed at accommodating the radical left in specific areas

- Tech-focused instances: Like open software enthusiasts or tech workers interested in the fediverse

Most of the instances were either invite-only, closed for registration or had some form of approval process in place. Only two had completely open registration, where new users could sign up without any barriers.

17 of the respondents spend 2 hours or less per week on moderation tasks. The larger instances created more work for the mods, with the mod of the largest instance spending between 20 and 24 hours per week on moderation. Out of the 26 instances, only two indicated that their mods got paid for some of their time. The rest work for free.

One of the challenges and allures of the fediverse is the ability to choose an instance with moderation practices and community guidelines that suit your needs as a user. We asked the mods which types of content they allowed in their instance guidelines, and how they decided on them.

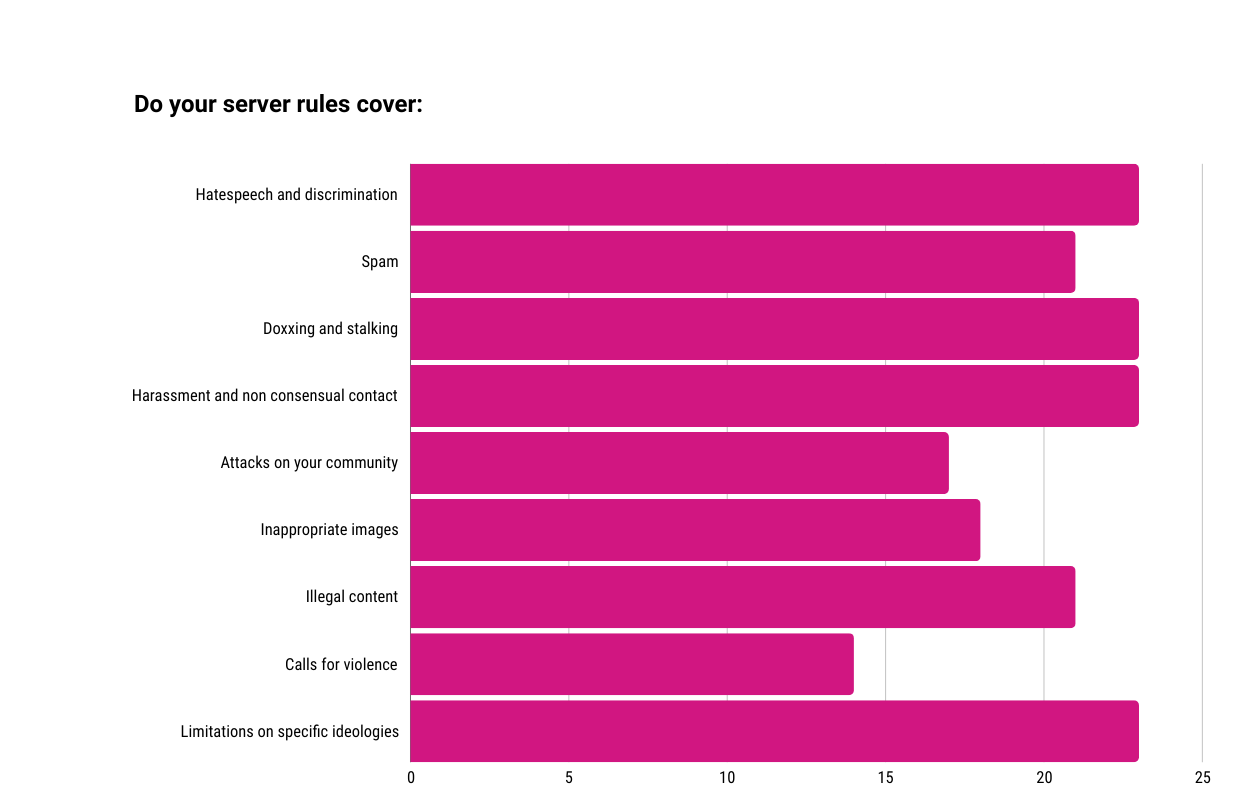

The majority of instances had rules that prohibited hate speech, spam, doxxing and harassment. Furthermore, content deemed illegal (like CSAM) is also specifically mentioned in most community guidelines. Many servers allow NSFW content, a few ask for it to be specifically marked.

When asked how they created their community guidelines, 19 out of 28 responded that they were inspired by other fediverse instances. Only five mods responded that they got input for their guidelines from their users. We also asked the mods if they shared moderation decisions, ie. banning users or defederating other instances, with their users, which few indicated they did.

The responses indicate that the admin and moderation practices happen at the discretion of the mods or the admin group and that while the needs of the community may be taken into account, there is not an extensive practice of getting community input for moderation decisions.

Responses from the questionnaire for the moderators and admins on community guidelines on the servers they managed. 28 admins and mods participated in the survey

There is no automated way of removing harmful content on the fediverse. An instance mod can set up certain filters, and users can report harmful content, which a mod can then remove, limit or suspend via their administration interface. The administration panel also allows a mod to defederate from entire instances, meaning their users cannot interact with that instance's users at all.

We asked the mods if they found the tools at hand to be adequate: 7 responded no, 6 responded somewhat and 9 responded yes. A couple of specific tools and functionalities suggested were:

- A way for users to white- or greylist people

- Better tools to share block- and defederation lists between servers

- The ability to time-limit suspensions, defederations and blocks

- A way to communicate directly with users who are either reported or make a report. For instance a kind of “admin inbox”.

- The ability to filter out specific types of content, eg. by hashtags or location

It was also noted that “Mastodon lacks moderation tools at the user-level (like the possibility to turn off comments, for example)”.

Moderating harmful content and controversies

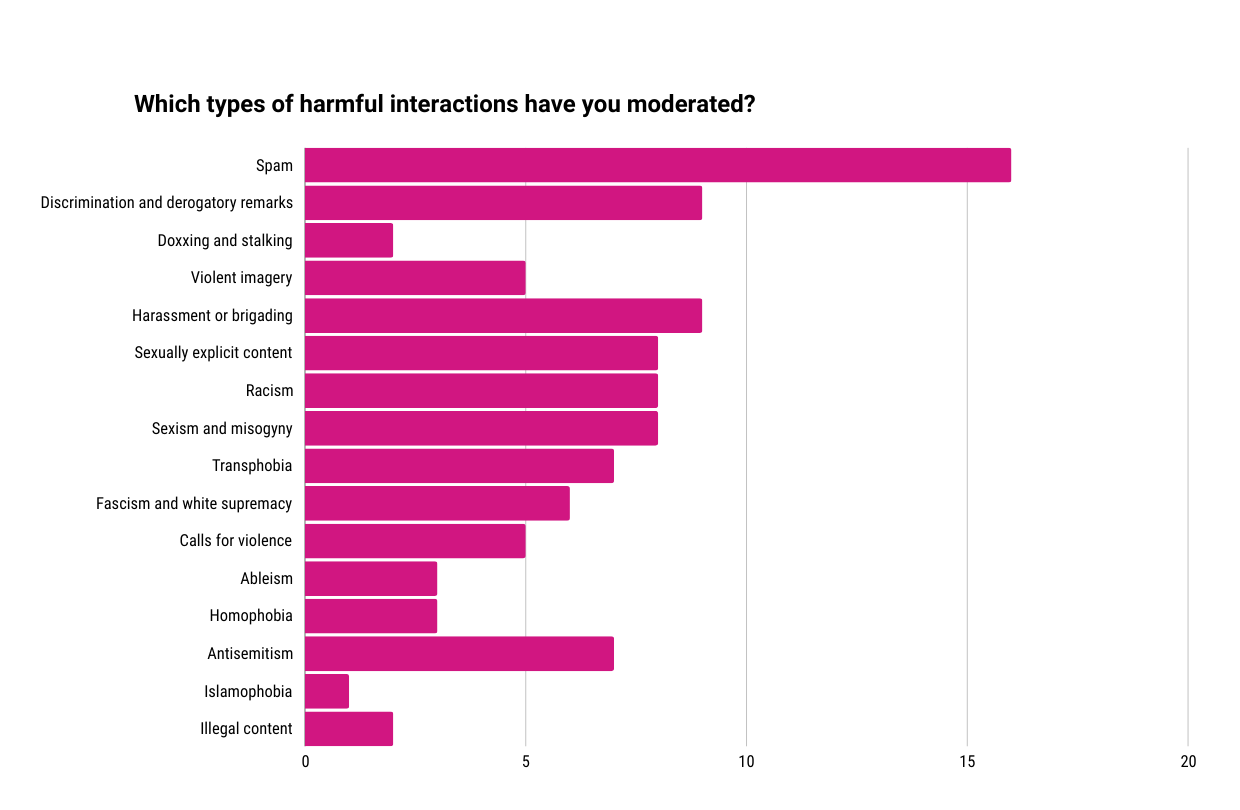

We asked the mods what types of harmful content they had experience moderating. Under half responded they had experience removing hateful or illegal content. Mostly, it seems, mods had experience removing spam. Many responded that they had no experience having to moderate or remove any content from their own instances yet.

Responses from the questionnaire for the moderators and admins on moderation experiences of harmful interactions. 28 admins and mods participated in the survey

The fact that the mods have limited experience moderating racism aligns poorly with the experiences of the users surveyed for this paper: 23.3% of users have experienced or seen racist content in the fediverse, whereas only 8.1% of mods and admins have moderated those types of interactions. Whilst the users and mods from our survey are not all from the same instances, there is a clear indication that issues like racism are widespread enough for almost ¼ of the surveyed users to have seen or experienced it.

We also asked the mods which types of moderation decisions had resulted in controversies or disagreements with their users, and which ones they found to be more difficult.

Some of the moderation decisions that resulted in controversy were around when mods decided to block other instances or users, for instance:

“Some (popular) members of the tech community are fascist, and our users occasionally ask us to unblock them.”

“Yes, we had disagreements on the federation level, about servers that we suspended”

“We limited an instance with a mix of good and bad press persons. users have tried to guilt us into un-limiting”

“Lots of white queers on other servers trying to excuse anti-blackness among their friends, defend them against being defederated. This group is bold and tends to directly message administrators to appeal against decisions made to block sources of racist harassment on other instances. Even with small servers not directly involved.”

The issues here all relate to the practice of defederating from other fediverse instances in response to either poor moderation practices, perceived poor or harmful behaviour from their users or a disagreement on core values. The question of whether this decision is for the individual user or the admin to make is a contested one across the fediverse. Recommendations on what instances to block are often shared on the fediverse under the hashtag #fediblock. The tag was created by users Marcia X and Ginger to help instances share recommendations on instances with toxic or harassing user groups that could be preemptively blocked to keep them from harassing fediverse users.

The decision to defederate from larger instances, with popular or prominent users, will often result in heated debates between both mods and user groups. Similarly, many mods responded that choosing when to defederate from other instances was the moderation decision they personally found to be the hardest, not in the case of outright far right or harassment instances, but with instances with a mixed user group where the violations were in a greyer area.

“Several instances have become "too big to block" (aka, mastodon.social, hachyderm, etc); which limits our abilities to defederate from places that allow and accept extremely inappropriate behaviour.”

“I'm deeply aware - and have even written about - how site defederation is key to the fediverse's use of the "nazi bar" phenomenon against nazis and with actual far-right it's not an issue. But on various news sources, it's a tougher call. There are sites I would prefer to defederate completely where I go with silence instead.”

“Defederation of instances is the hardest part of administration. Although defederating "known-bad" instances is simple, there are a few cases of instances who end up on blocklists due to bad behaviour of their administrators, and whose users end up not being aware of what is happening.”

According to the responses from moderators and admins to our survey, moderating instances on the fediverse is not too time-consuming or emotionally draining. A lot of harmful and downright illegal content is either preemptively blocked, or does not find its way to the instances because users have to be approved before sign-up, and usually sign up according to values and guidelines on the server.

However, in periods of heavy growth or migration from other platforms, like when Elon Musk bought Twitter in 2022, 14 of the 28 responded that the workload increased, for many quite dramatically.

“We completely shut down all new accounts during the heavy migration phases.”

“It has massively increased our workload, in good ways and bad.”

“Yes, we had a sudden influx of users and we experienced a growth of federation links with other instances. That obliged us to acquire hardware and impacted our moderation workload internally and externally”.

For other mods in the survey, Musk’s takeover of Twitter was the very reason they started running an instance on the fediverse.

The most difficult moderation decisions concern “grey area” cases: reports against people who are part of an established community, or the suspension of local users, some of whom the mods may know personally, where the reports do not relate to black-and-white violations.

“Moderating decisions where I know the person pretty well. Like a regular poster venting frustration about capitalism, but uses some kind of shocking violent language.”

“Well established members being reported by others on respectable instances for ideological disagreement”

This highlights the issue of volunteer moderation done in established communities. Unlike moderators of commercial social media, who are anonymous and adhere to a policy, moderators of the fediverse are volunteers and often embedded in the subculture, community or organisation that the instance is established for. This increases the expectations of the community, as the moderators will be held personally accountable for their decisions on eg. defederating from other instances or blocking individual users. Moderation decisions, or lack thereof, are also sources of heated discourse throughout the fediverse, and sometimes result in defederation and conflict with other admins and users.

Summary: User cultures, racism and gatekeeping

Mainstream social media are built on a growth paradigm, and all of their updates and developments can be understood and explained through this lens. They aim to lure over power users and make it easy for people to invite friends, in order to grow their user base. They create content algorithms and retention mechanisms so that people stay on the platform longer, which grows eyeballs and ad revenue.

The fediverse is not run by a tech startup, has no founder, and operates under a different paradigm. Many of the users who got on board with the fediverse prior to the great influx following Musk’s takeover of Twitter were already in tech circles, active in open source communities, or looking for specific subcultures on the federated internet. They were willing to learn to navigate new tech to do so.

Some parts of the fediverse, be it specific instances or users, are vehemently anti-growth and prefer the slow, organic buildup of the fediverse, as well as the friction of both the onboarding and the user experience: having to choose an instance, use a web client or an app, and find people to follow and content to read without an easy search function or a content algorithm. Many users and instance admins see this as a feature, not a bug to be fixed.

The influx of new users trying out the fediverse, particularly Mastodon, did not sit well with all existing users. Some found the new users’ complaints about the user experience and the lack of Twitter-like functionality, as well as their lack of understanding of the pre-existing culture, especially tiresome and frustrating.

Venting this frustration at newcomers, however, also has a certain gatekeeping effect. For someone used to social media like Twitter, joining already means choosing a server (let alone understanding what a server is) and navigating new norms around posting, e.g. the widespread use of content notes on the fediverse. On top of this, newcomers were often scolded for not understanding “the culture” that made the fediverse special.

This was frequently mentioned by the users we surveyed:

“Honestly, the most negative interactions I've had on the platform myself have been with Fediverse Zealots: people who express derision for people using commercially-funded services and seem very gatekeeper-y about Mastodon in general. I read the term ‘petulant nannyism’ and it honestly fits.”

“I believe the overly technical nature and high level of gatekeeping between instances [are] preventing Mastodon's growth as a viable alternative to Twitter. Simply put: most of the public does not have a deep involvement with the nuts and bolts of their social media services, and has no desire to.”

The survey of the admins and moderators did not show the same level of reflection around gatekeeping and exclusionary cultures. This indicates a blind spot for moderators and super users of the fediverse.

Making the fediverse a place that can accommodate the many people who are tired of corporate social media, have experienced harassment, or are looking for the kind of community that volunteer-driven social media can provide is a worthwhile goal. It is a key motivating factor behind the creation of Mastodon and, as we can see from the survey, a motivating factor for mods and users alike.

However, if the people already on the fediverse want to invite growth outside the groups that existed before the Twitter influx, the mods and admins need to grapple with the exclusionary part of the culture in their instances.

Many people are currently seeking social media alternatives, and many have found one on the fediverse. However, for many, the fediverse is still too technical, too clunky, too white and too exclusionary in its culture to be a welcoming online home and a sustainable alternative to big tech.

As the fediverse is decentralised by nature, these issues cannot be tackled by a central body. Rather, what is needed is a culture shift, which can only happen if the current power users of the fediverse recognise its necessity and their own complicity, and are willing to help push for a shift in which newcomers, non-techies and POCs feel more welcome upon entry.

Monir Mooghen & Maia Kahlke Lorentzen

Commissioned by Softer Digital Futures

October, 2024